Web scraping is one way to collect information from the web, and R offers several ways to fetch it, from a single table to multiple tables on an HTML page. The `rvest` package is used in R to perform web scraping tasks. It feels very similar to `dplyr`, a well-known data analysis package, because it relies on the pipe operator and behaves in much the same way. Once we know how to locate certain elements on a page, the question is how to implement that logic in R.

In this tutorial we will be covering scraping Indeed jobs with R and rvest. You will be learning how to exactly locate the information you want and need in the HTML document. At the end, we will have developed a fully functioning scraper for your own use.

Before we jump in, here and here are two blog posts about the analysis of the scraped data. In the first one, we wanted to know how to become a Data Scientist. We looked at the level of education required, what majors you should pick, and what technologies are most important to know.

The second post is about the differences between a Data Scientist, a Data Analyst, and a Data Engineer.

Now let’s jump in!

When you work in a technology-driven industry, it is very important to keep up with current trends. This is particularly true in the field of Data Science, where no one knows exactly where the boundaries lie between a Data Scientist, a Data Analyst, and a Data Engineer, let alone what kind of skills are required for each position. Therefore, we will attempt to clarify what it takes to become a Data Scientist by developing a web scraper for Indeed job postings.

Web scraping Indeed jobs with R can easily be accomplished with the rvest package. With this package, getting the relevant information from Indeed’s website is a straightforward process.

So let’s start with what we will be covering:

- How to get job titles from Indeed’s website.

- How to get job locations.

- How to look for company names.

- How to scrape all summary descriptions for each job.

- Building an entire scraper by putting all parts together.

First, we will be loading the required packages for this tutorial.

Then we’ll have a look at how to get job titles from the web page. We want to look for Data Scientist jobs in Vancouver, Canada.

After we are done with that, we will copy the link address and store the URL in a variable called url. Then we will use the xml2 package and the read_html function to parse the page. In short, this means that the function will read in the code from the webpage and break it down into different elements (<div>, <span>, <p>, etc.) for you to analyse it.

In the code below, we will show you how to get the page into R in order for you to analyse it.
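Since the original code block is not reproduced here, the following is only a minimal sketch of this step. The search URL is an assumption (copy the real address from your browser), and a small inline HTML string stands in for the live page so the example runs without network access.

```r
library(rvest)  # html_elements(), html_attr(), html_text(), %>%
library(xml2)   # read_html()

# Assumed search URL for Data Scientist jobs in Vancouver; Indeed may use a
# different URL scheme today, so copy the address from your own browser.
url <- "https://ca.indeed.com/jobs?q=Data+Scientist&l=Vancouver%2C+BC"

# For a live run you would parse the page like this:
# page <- xml2::read_html(url)

# Offline stand-in (hypothetical markup) so the sketch runs anywhere:
page <- xml2::read_html(
  '<div><a class="jobtitle" title="Data Scientist">Data Scientist</a></div>'
)

# The parsed page is an xml_document that we can query for nodes.
class(page)
```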

After we are done, we are ready to extract relevant nodes from the XML object.

Once they have been parsed by the xml2::read_html() function, we call elements like divs (<div>), spans (<span>), paragraphs (<p>), or anchors (<a>) nodes. Besides element nodes, there are also attribute nodes and text nodes.
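To make the three node types concrete, here is a small sketch on a hypothetical HTML fragment (the markup is invented for illustration, not copied from Indeed). It uses xml2's xml_find_all() with XPath expressions that select element, attribute, and text nodes.

```r
library(xml2)

# Hypothetical fragment used only for illustration.
doc <- read_html(
  '<div class="row"><a class="jobtitle" title="Data Scientist">Data Scientist</a><p>Vancouver</p></div>'
)

element_nodes   <- xml_find_all(doc, "//a | //p")    # element nodes
attribute_nodes <- xml_find_all(doc, "//a/@title")   # attribute nodes
text_nodes      <- xml_find_all(doc, "//a/text()")   # text nodes

xml_name(element_nodes)    # tag names of the element nodes
xml_text(attribute_nodes)  # value stored in the title attribute
xml_text(text_nodes)       # text inside the anchor
```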

But wait… how do we know where to find all the relevant information we are looking for? Well, that is the hard part of developing a successful scraper. Luckily, Indeed’s website is not very hard to scrape.

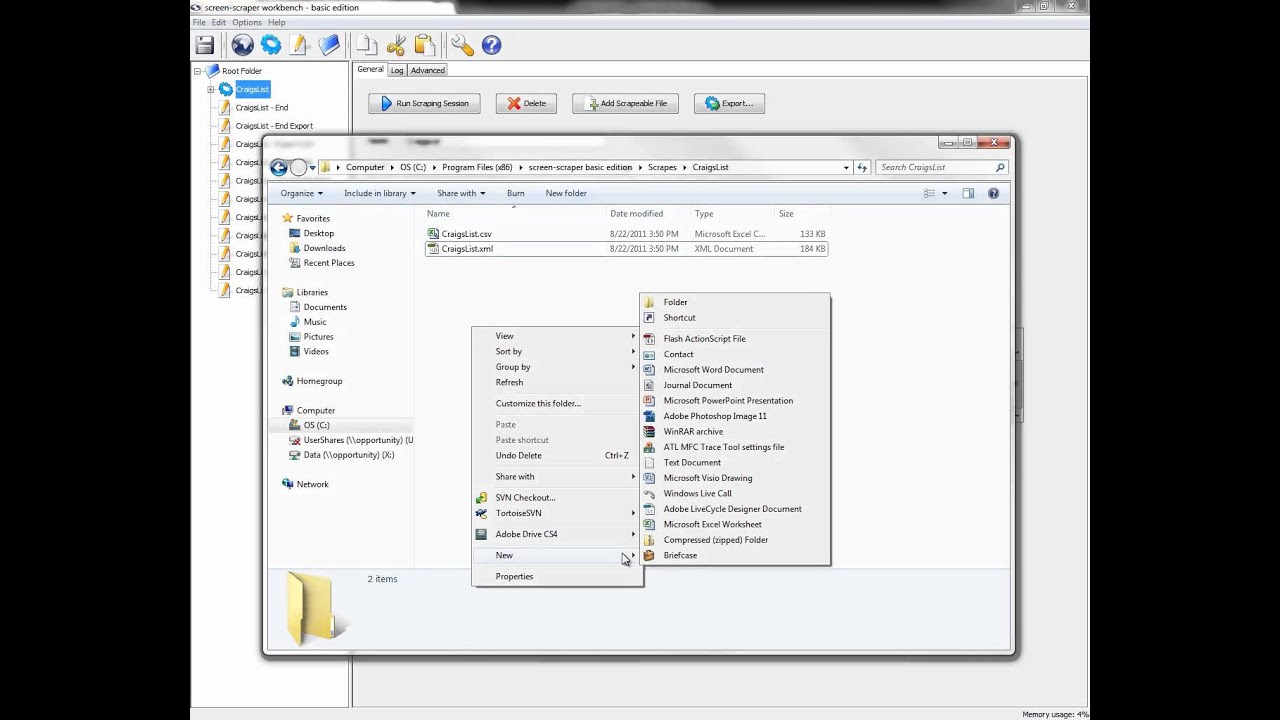

So, what we will be doing is looking at the source code of the website and also inspecting individual elements.

Let’s first inspect the code. On Windows, you can right-click anywhere on Indeed’s website and then select Inspect. This should look like this:

Then, click on the little arrow in the top right corner and hover over elements on Indeed’s website.

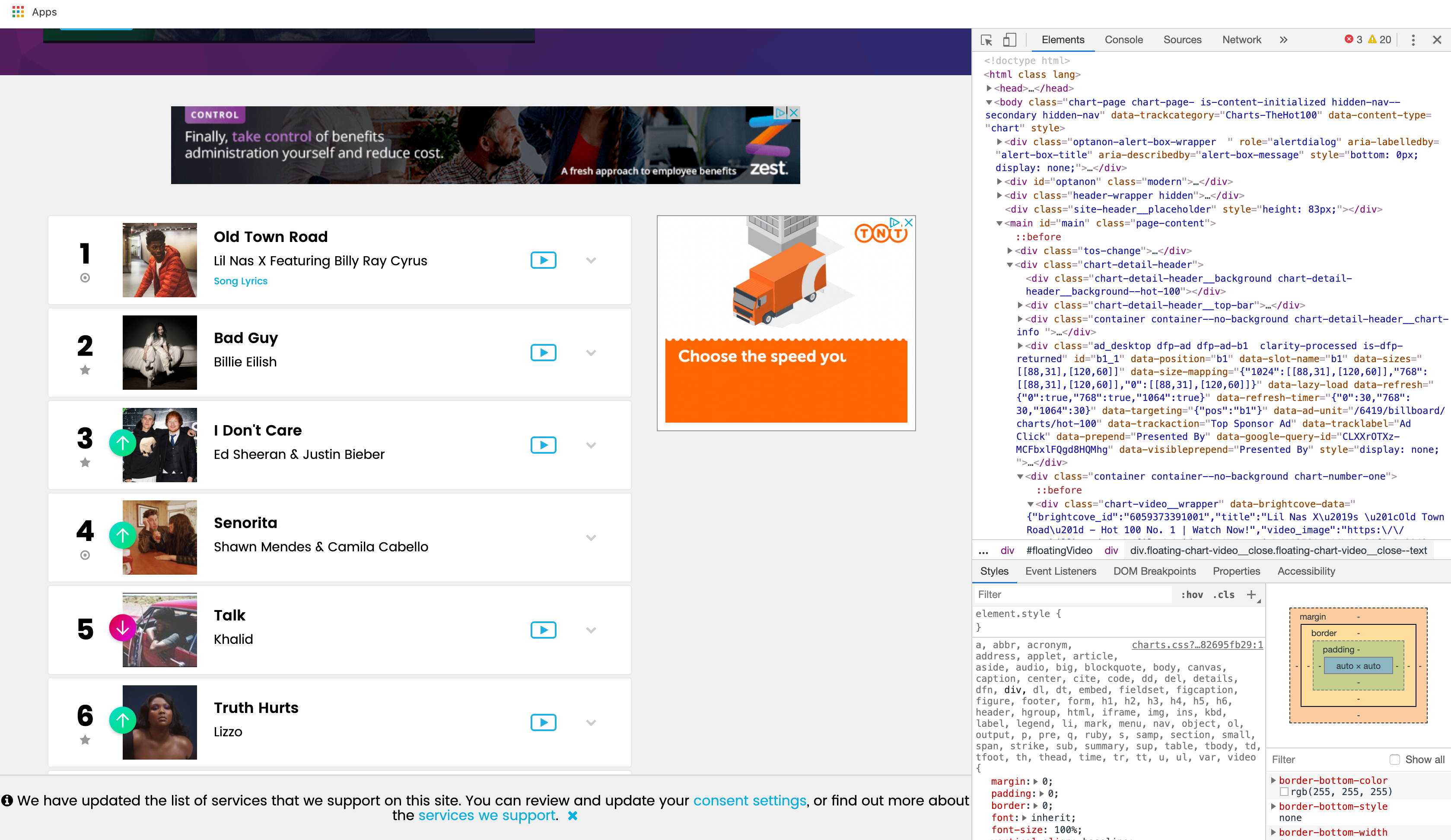

By doing that, you can see that the corresponding code on the right-hand side gets highlighted. The job title Data Scientist and Statistician is located under the anchor tag. If we look more closely, we can also see that it is located under the jobtitle CSS selector and under the xpath a[@class="jobtitle"]. This makes it much easier to find individual pieces on a website. Now you might ask yourself what CSS selectors are and what an xpath is.

Xpath: a path to specifically extract certain parts from a tree-structured document such as XML or HTML. The path can be very specific and makes it possible to grab certain parts from a website easily.

CSS Selectors: A CSS selector has a similar function to an xpath, namely locating certain nodes in a document and extracting information from these nodes. Every CSS selector can be translated into an equivalent xpath, but not the other way around.

Here is an example of how the syntax of an xpath works: //tagname[@attribute = "value"]

Now let’s have a look at a html code snippet on Indeed’s website:

Here we can see that there is an attribute data-tn-element whose value is "jobTitle". This particular attribute is under the anchor node. So let’s construct the xpath:

//a[@data-tn-element = "jobTitle"]. And voilà, we get all job titles. You’ll notice that we have included //* instead of //a in our code below. The star acts as a wildcard and selects all elements or nodes, not just the anchor node. For Indeed’s website, the attribute data-tn-element is always under the anchor node, so the wildcard symbol wouldn’t be necessary.
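As a quick check of the wildcard remark, the sketch below runs both xpaths against a hypothetical snippet modeled on the description above (the markup and the href values are assumptions). Because the attribute only appears on an anchor, both expressions match the same single node.

```r
library(rvest)

# Hypothetical snippet; the real Indeed markup may differ.
snippet <- '
<div class="row result">
  <a class="jobtitle" data-tn-element="jobTitle"
     title="Data Scientist and Statistician" href="/job/1">Data Scientist and Statistician</a>
  <a class="other" href="/about">About</a>
</div>'
page <- read_html(snippet)

# Anchors only versus any element carrying the attribute.
anchors_only <- html_elements(page, xpath = '//a[@data-tn-element = "jobTitle"]')
any_element  <- html_elements(page, xpath = '//*[@data-tn-element = "jobTitle"]')

length(anchors_only)
length(any_element)
```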

So let’s recap what we have done:

- We looked at the source code and identified that the job title is located within the anchor <a> and <div> nodes.

- Then we looked at the attribute data-tn-element with the value "jobTitle".

- From there, we grabbed the "title" attribute and extracted the information.

Don’t be discouraged if it looks somewhat complicated at first. It takes some time to get used to the structure of an HTML document. If you have a basic notion of what an xpath is, what the different nodes are, and how you can select elements from a document, then you can start trying. Keep selecting different nodes and different attributes until you are happy with your results. Especially at the beginning, there is a lot to learn from web scraping, and it’s trial and error until you get the information you want.

In the code below, we select all div nodes and specify the xpath, from which we grab the title attribute to get all job titles from the website.
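The original code is not reproduced here, so this is a sketch of what that step could look like. The inline HTML is a hypothetical stand-in for the parsed results page; with a live page you would use the object returned by read_html(url) instead.

```r
library(rvest)

# Hypothetical stand-in for the parsed Indeed results page.
page <- read_html('
<div class="row result">
  <a data-tn-element="jobTitle" title="Data Scientist" href="/job/1">Data Scientist</a>
</div>
<div class="row result">
  <a data-tn-element="jobTitle" title="Data Analyst" href="/job/2">Data Analyst</a>
</div>')

job_title <- page %>%
  html_elements("div") %>%                                               # all div nodes
  html_elements(xpath = './/*[@data-tn-element = "jobTitle"]') %>%       # wildcard xpath
  html_attr("title")                                                     # grab the title attribute

job_title
```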

Alternatively, we could have specified a CSS selector as well. This would look like this:
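A possible CSS version, again on a hypothetical stand-in page: the attribute selector below is one way to express the same condition, and it is an assumption that it matches what the original post used.

```r
library(rvest)

# Hypothetical stand-in for the parsed Indeed results page.
page <- read_html('
<div class="row result">
  <a data-tn-element="jobTitle" title="Data Scientist" href="/job/1">Data Scientist</a>
</div>
<div class="row result">
  <a data-tn-element="jobTitle" title="Data Analyst" href="/job/2">Data Analyst</a>
</div>')

# CSS attribute selector instead of an xpath.
job_title_css <- page %>%
  html_elements('[data-tn-element="jobTitle"]') %>%
  html_attr("title")

job_title_css
```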

Either way, we are getting all the job titles from the website.

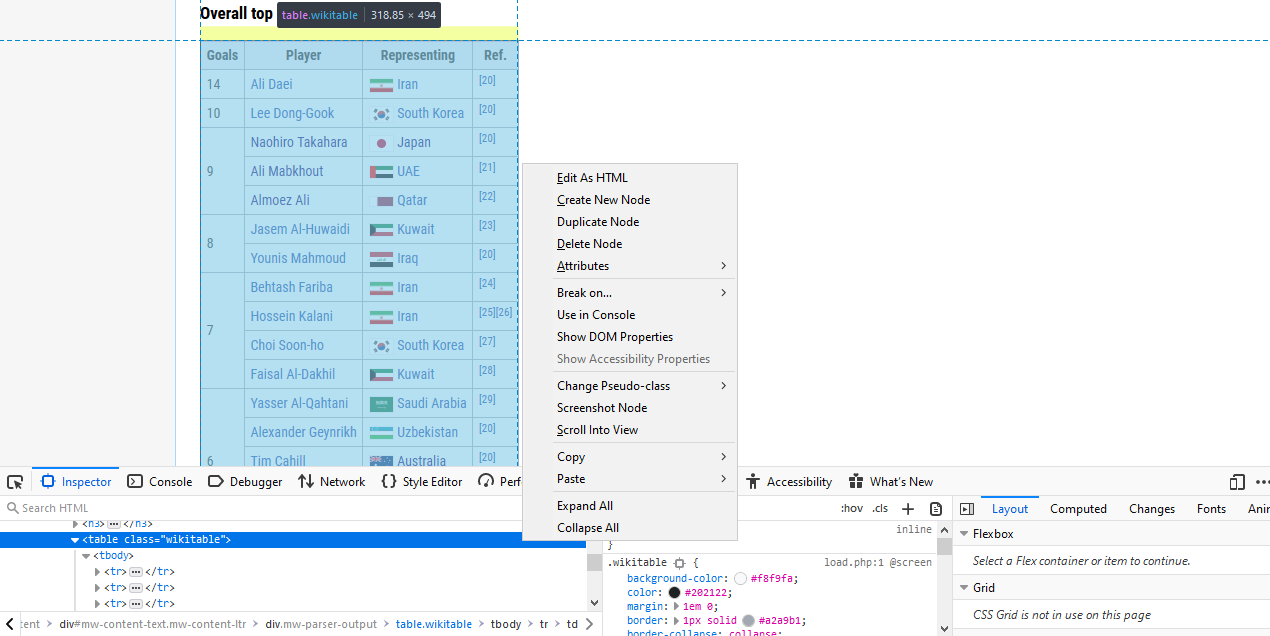

Let’s move on to the next step: getting the job location and the company name. First, let’s have a look at the source code and find out where company names and locations are located in the document.

The next picture shows where the company name is located.

We can see that company location and name are located in the <span> element with a class attribute value of location and company respectively.

Let’s see how we can extract this information from the document. First we’ll specify the xpath.
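A sketch of the xpath version, assuming the <span> elements carry exactly the class values company and location as described above. The inline HTML and the company name are invented for illustration.

```r
library(rvest)

# Hypothetical stand-in for one result on the page.
page <- read_html('
<div class="row result">
  <span class="company"> Acme Analytics </span>
  <span class="location">Vancouver, BC</span>
</div>')

company_name <- page %>%
  html_elements(xpath = '//span[@class = "company"]') %>%
  html_text(trim = TRUE)   # trim surrounding whitespace

job_location <- page %>%
  html_elements(xpath = '//span[@class = "location"]') %>%
  html_text(trim = TRUE)

company_name
job_location
```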

Now, we are getting the same exact information just with the corresponding CSS selectors.
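The CSS counterpart could look like the sketch below, using class selectors on the same kind of hypothetical markup. Unlike the exact-match xpath, a class selector also matches elements that carry additional classes.

```r
library(rvest)

# Hypothetical stand-in for one result on the page.
page <- read_html('
<div class="row result">
  <span class="company"> Acme Analytics </span>
  <span class="location">Vancouver, BC</span>
</div>')

company_name_css <- page %>%
  html_elements("span.company") %>%
  html_text(trim = TRUE)

job_location_css <- page %>%
  html_elements("span.location") %>%
  html_text(trim = TRUE)

company_name_css
job_location_css
```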

Lastly, we want to get the job description for every single job on the website. You’ll notice that the current page shows only a short summary of each job. However, we want the full description: how many years of experience we need, what skill set is required, and what responsibilities the job entails.

In order to do that we have to collect the links on the website. We do that with the following code.
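One way to collect the links, sketched on hypothetical markup: read the href attribute of each job title anchor and prepend the site's base address. Both the relative hrefs and the base address https://ca.indeed.com are assumptions here.

```r
library(rvest)

# Hypothetical stand-in for the parsed results page.
page <- read_html('
<div class="row result">
  <a data-tn-element="jobTitle" title="Data Scientist" href="/rc/clk?jk=abc123">Data Scientist</a>
</div>
<div class="row result">
  <a data-tn-element="jobTitle" title="Data Analyst" href="/rc/clk?jk=def456">Data Analyst</a>
</div>')

links <- page %>%
  html_elements(xpath = '//*[@data-tn-element = "jobTitle"]') %>%
  html_attr("href")

# The hrefs are relative, so turn them into absolute URLs.
links <- paste0("https://ca.indeed.com", links)

links
```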

After we have collected the links we can now locate where the job description is located in the document.

Looking at the picture above, we notice that the job description is in a <span> element with the class attribute value jobsearch-JobComponent-description icl-u-xs-mt--md. Let’s have a look at the code below.
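A sketch of extracting the description from a single job page. To stay robust against the exact spelling of the second class, it matches only on the first class name, jobsearch-JobComponent-description, which is a simplification rather than the original code. The inline HTML stands in for a page you would normally fetch from one of the collected links.

```r
library(rvest)

# Hypothetical stand-in for one job posting page; with live data you would
# call read_html() on one of the collected links instead.
job_page <- read_html('
<div>
  <span class="jobsearch-JobComponent-description icl-u-xs-mt--md">
    We are looking for a Data Scientist with 3+ years of experience in R.
  </span>
</div>')

job_description <- job_page %>%
  html_elements(".jobsearch-JobComponent-description") %>%
  html_text(trim = TRUE)

job_description
```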

That was the majority of our work! We are now done scraping Indeed jobs with R and can focus now on building a functioning scraper. That means, we have to put all the different parts together. One more thing we have to implement in our scraper are multiple page results.

We can do that by modifying the URL in our code. Notice how the URL changes as we click through from page 2 to the last page.

We have to manually find out how many page results Indeed’s website returns for our query. When we have completed that then we are finally ready to build the scraper. Let’s go!

The last page when we built the scraper was 190. So we are specifying page_result_end to be 190 and the starting page, page_result_start, to be 10.
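The page values above can be turned into a vector of result URLs. This sketch assumes that Indeed paginates with a start query parameter in steps of 10 and that the base URL looks like the one below; check both against the address bar before relying on them.

```r
# Assumed base search URL (copy the real one from your browser).
url <- "https://ca.indeed.com/jobs?q=Data+Scientist&l=Vancouver%2C+BC"

page_result_start <- 10   # starting page value from the text
page_result_end   <- 190  # last page value when the scraper was built

# 10, 20, ..., 190
page_results <- seq(from = page_result_start, to = page_result_end, by = 10)

# Assumption: the page offset is passed as "&start=<value>".
page_urls <- paste0(url, "&start=", page_results)

head(page_urls, 2)
length(page_urls)
```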

Afterward, we initialize full_df and start the for loop. We scrape the job title, the company name, the company location, and the links. Then we start the second for loop, where we collect all the job summaries from the first page of results. Finally, we put all our scraped data into a data frame and go on to the next page. We repeat this until we have reached the last page, and then we are done.
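Putting the pieces together could look roughly like the sketch below. It is not the original scraper: the function names, the read_page argument (which lets you swap in a fake reader for offline testing), the pause between requests, and the start parameter are all assumptions made for illustration.

```r
library(rvest)

# Turn one parsed results page into a data frame, one row per posting.
# Assumes every posting has a title, company, and location; otherwise the
# columns differ in length and data.frame() raises an error.
scrape_results_page <- function(page, base = "https://ca.indeed.com") {
  title_nodes <- html_elements(page, xpath = '//*[@data-tn-element = "jobTitle"]')
  data.frame(
    job_title = html_attr(title_nodes, "title"),
    company   = html_text(html_elements(page, xpath = '//span[@class = "company"]'), trim = TRUE),
    location  = html_text(html_elements(page, xpath = '//span[@class = "location"]'), trim = TRUE),
    link      = paste0(base, html_attr(title_nodes, "href")),
    stringsAsFactors = FALSE
  )
}

# Fetch the full description behind one posting link (NA if not found).
scrape_description <- function(link, read_page = read_html) {
  read_page(link) %>%
    html_element(".jobsearch-JobComponent-description") %>%
    html_text(trim = TRUE)
}

# Loop over all result pages and collect everything in full_df.
scrape_indeed <- function(url, page_result_start = 10, page_result_end = 190,
                          read_page = read_html, pause = 1) {
  full_df <- data.frame()
  for (page_result in seq(page_result_start, page_result_end, by = 10)) {
    page <- read_page(paste0(url, "&start=", page_result))  # assumed URL scheme
    df <- scrape_results_page(page)
    # Second loop: visit each posting and collect its description.
    df$description <- vapply(df$link, scrape_description, character(1),
                             read_page = read_page, USE.NAMES = FALSE)
    full_df <- rbind(full_df, df)
    Sys.sleep(pause)  # pause between result pages to be polite to the server
  }
  full_df
}

# Live usage (not run here):
# full_df <- scrape_indeed("https://ca.indeed.com/jobs?q=Data+Scientist&l=Vancouver%2C+BC")
```

Passing the page reader in as an argument keeps the parsing logic testable without hitting the website, which is handy while you are still adjusting the xpaths.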

The scraper currently only does one city at a time. However, you can easily expand on that and add another for loop where you specify the cities you want to scrape data from. I manually changed the URLs and scraped data for Vancouver, Toronto, and Montreal. Afterward, I put all postings in a data frame, ready for analysis in our next blog post.
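A sketch of how such a city loop might start. The three cities come from the text, while the province codes, the base URL, and the l query parameter are assumptions. The loop only builds and prints the URLs; the actual scraping call is left as a comment.

```r
# Cities named in the text; province codes are an assumption.
cities <- c("Vancouver, BC", "Toronto, ON", "Montreal, QC")

# Assumed base search URL with the location passed in the "l" parameter.
base_url <- "https://ca.indeed.com/jobs?q=Data+Scientist&l="

# URL-encode each city so commas and spaces are safe in a query string.
encoded <- vapply(cities, utils::URLencode, character(1),
                  reserved = TRUE, USE.NAMES = FALSE)
city_urls <- paste0(base_url, encoded)

for (city_url in city_urls) {
  # Here you would run your scraper on city_url and rbind() the result
  # onto one combined data frame.
  message("Would scrape: ", city_url)
}
```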

I hope you have enjoyed scraping Indeed jobs with R and rvest. If you have any questions or feedback, then let me know in the comments below.

Here are some resources that you might find interesting: